In the world of SEO, you've probably heard of PageRank, Domain Authority, and all sorts of other metrics which attempt to quantify how well a website is ranking in search engines.

There is, however, one metric that the majority of people don't know about – the EAT score.

The EAT score is sometimes described as an analytics-focused version of the word-of-mouth popularity index, based on search volume, organic traffic, referring domains, and commercial intent, that helps to determine your SERP quality. As we'll see further down, that description doesn't hold up.

What is EAT?

It's short for expertise, authoritativeness and trustworthiness.

Haven't marketers tried to present expertise, authority and trust all the time?

Why is it important now?

What has changed so that this is a big thing that we're talking about in SEO?

And I think a good starting point is the Medic algorithm update back in 2018.

It had a relatively big impact.

A lot of websites saw a big improvement, and a lot saw a big decline.

And as always, Google wasn't going to tell us anything about their core algorithm updates. We got the usual message from them.

But they did say to read their rater guidelines, and that was a big clue.

The rater guidelines do say a lot about E-A-T, so people were digging into it.

The other interesting thing is that the quality rater guidelines were updated a week before the Medic update.

And people, I think, incorrectly put two and two together: they started digging into the new things in the search quality rater guidelines and thought the key to Medic was some of the new EAT material.

One of the new things in the rater guidelines was having raters do research on the authors of content and that turned into a lot of people recommending adding bio boxes and author information to blog posts.

This is still a good thing to do, but it didn't appear to have any effect on Medic.

Turns out, there was a correlation study that looked at what types of things may have been involved in Medic. Author profiles, social links and credentials were only weakly correlated, and in some cases even negatively correlated, with which websites improved after Medic.

It also turns out that the people who did deep dives into the search quality rater guidelines had successful recoveries from the Medic update.

And one of the things that came out of it was a big surge of interest in E-A-T.

E-A-T was added to the quality rater guidelines back in 2013 but it wasn't until 2017-2018 that people started talking about it.

There were a lot of myths and a lot of confusion that surfaced after Medic.

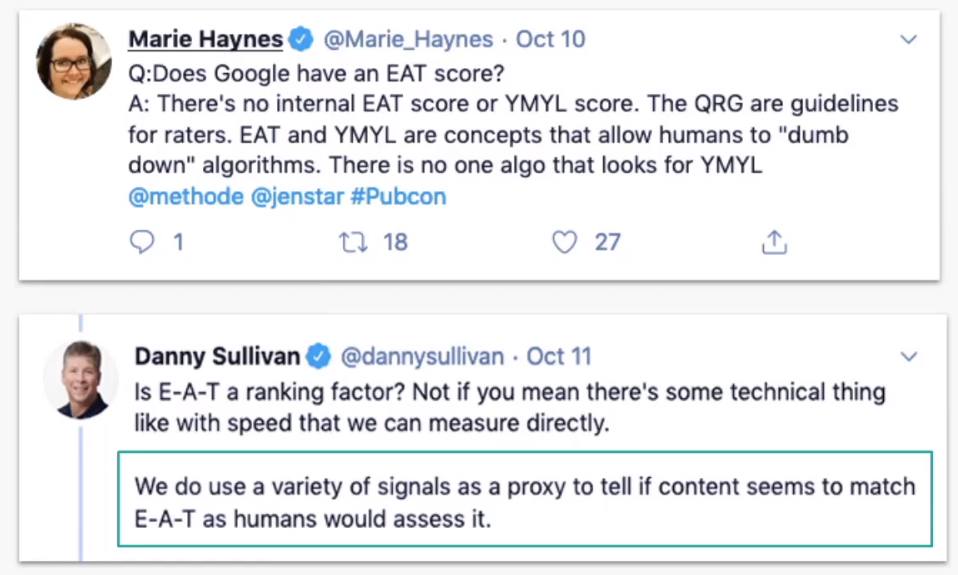

People started to think that there was an EAT score or a YMYL score, or that EAT itself was a ranking factor.

Well, the answer is NO.

EAT is not a ranking factor.

Danny Sullivan, at Google, summed it up perfectly in the tweet above by saying Google uses a variety of signals as a proxy to tell if the content seems to match EAT as humans would assess it.

Or in other words, Google's trying to tailor its algorithms to match what people think about expertise, authoritativeness and trustworthiness, and that's a really good way to sum up what's been going on.

Search Quality Rater Program

To talk any more about EAT, we first have to talk about the search quality rater program.

Who makes sure Google search results are good?

The answer is around 10,000 quality raters. Google has a program where they hire contractors to rate web pages, search result features they're thinking about adding, their current search results, and search results that would be affected by potential algorithm updates.

And their job is to generate data for improving search results.

According to Google, in 2020 they ran over 380,000 search quality tests with roughly 10,000 quality raters. Out of that, they ran over 17,000 live traffic experiments and went live with 3,600 algorithm changes.

So when Google says they're changing their algorithm every day, that's true: it works out to about 10 changes a day on average. And this program has possibly existed since 2003.
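As a quick sanity check on the "about 10 changes a day" figure, here is the arithmetic as a minimal sketch. It only uses the 3,600 number quoted above; the variable names are just for illustration.

```python
# Algorithm changes launched in 2020, as quoted in the text above.
changes_launched = 3600

# 2020 was a leap year, so it had 366 days.
days_in_2020 = 366

# Average number of changes going live per day.
changes_per_day = changes_launched / days_in_2020

print(f"{changes_per_day:.1f} changes per day on average")
```

That comes out a little under 10, so "about 10 changes a day" is a fair rounding.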

In the webmaster world, the earliest known instance of someone noticing this kind of traffic in their referral logs dates from around then.

The post says it might have been from an evaluator program at Google or something.

So it may have been as early as 2003 that they started doing this.

The big takeaway is that it's a quality assurance program.

Google wants to know if the changes they want to make to the SERP are going to improve search results.

Before any search algorithm update is tested on live traffic, it goes through the search quality raters to see whether high-scoring pages would be promoted or demoted by the potential change.

And if the potential change doesn't improve search results, according to the raters, it's not going to go live. It'll go back to the search engineers working on that project.

The most important takeaway here is if your website resembles highly rated websites according to search quality raters you will likely be favoured by future algorithm updates.

And the search quality rater guidelines themselves are a document that's updated once or twice a year. It's a handbook for these quality raters.

It gives them lots of instructions on how to use the software, how to understand what the goals are, what quality is, and how to evaluate web pages based on subjective criteria. It's very subjective.

These are a lot of things that machines are not very good at evaluating on their own.

And EAT was added to this document in 2013.

One takeaway from the rater guidelines is that they're written for raters, not SEOs.

So it's a document for raters to do their job of assigning values to search results. It's not about telling SEO pros what's in the algorithm right now.

A lot of people think that what's in the quality rater guidelines will end up in the algorithm eventually, but it's hard to predict when that will happen, whether it has already happened, and how important those changes are going to be.

What we should take from it is that these are the things Google is generally focused on right now.

And a very important reminder: the quality scores from the program do not affect live rankings.

So, if our websites show up in the program and the rater rates them, that score will have nothing to do with our current live rankings.

How does the rating system work?

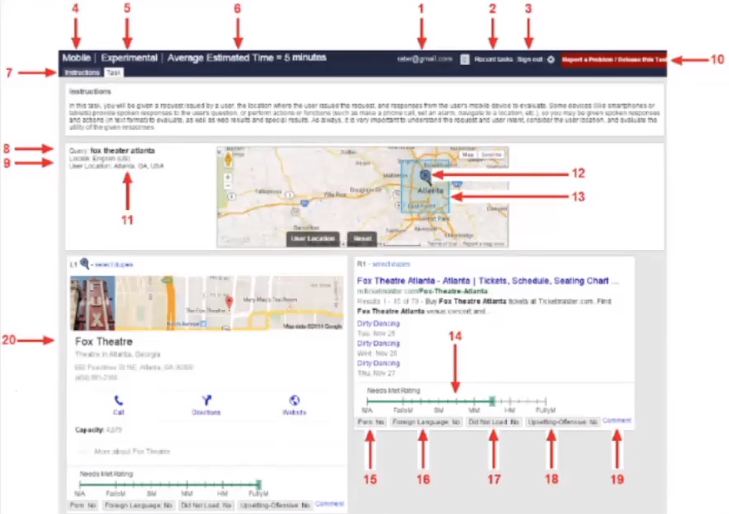

Raters are assigned tasks. The interface looks like this:

Blurry screenshot?

This is one of the few screenshots that's been leaked from the program and it looks a lot like a search engine results page.

And the raters are given a query with a context: what country the query is in, what language they're rating in, the location in case it's a local query, and the search results that appear on the SERP.

Then the raters are expected to determine what the user intent of the query is and then assign two scores.

One for needs met and one for page quality.

We'll talk a little more about both of those later in this post.

And the guideline document gives lots and lots of examples of how raters should be scoring search results based on a query.

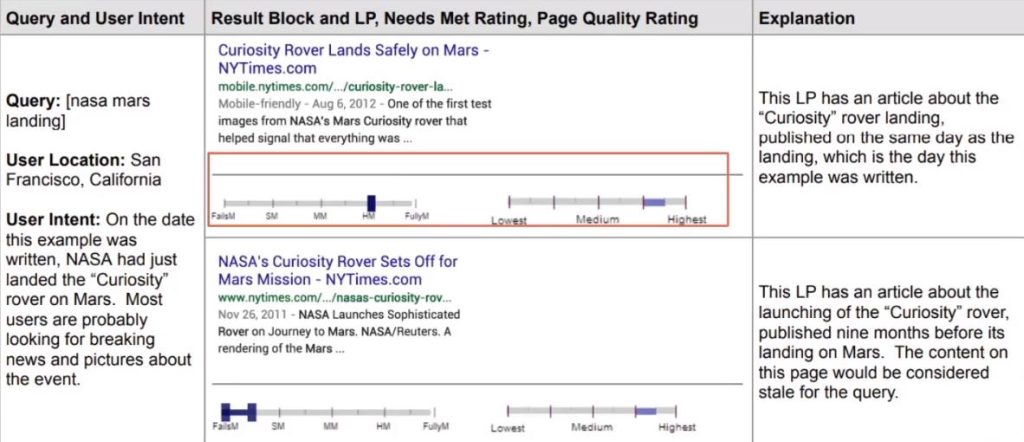

This screenshot's an example of how to score pages for the query "NASA mars landing".

And the highlighted box here is about the two sliders that indicate how a rater would score the two documents.

They're not given a number. The raters assign each search result a value on two scales: needs met, which runs from fails to meet to fully meets, and page quality, which runs from lowest to highest.

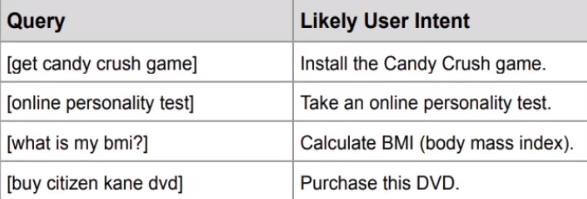

So raters have to assume user intent, and the guidelines give a few examples of what intent should mean to them.

Queries, according to Google, tend to be about doing something, which could be a device action like opening an app on a phone, going to a website or going to a location.

And the raters have to determine if the results are helpful.

Did the search results fully meet the needs of the query, or did they fail to meet the needs of the query completely?

And they also have to determine page quality, and here's where we get into E-A-T.

E-A-T

Page quality is based on a few different things. The first is the purpose of the page: what is it supposed to do? Is it supposed to inform users, get users to buy something, and so on?

Then there's the expertise, authoritativeness and trustworthiness of the website, of the authors that wrote it and of the people responsible for the website, plus the main content quality, which is what we would expect.

But EAT is not just about text quality as we thought about in the past.

It's about overall quality.

The whole thing.

We wrote another article on EAT and how to concentrate more on it to be safe from any Google algorithm updates affecting your website: "Ignore Google's New Search Update. This Is What You Should Do Instead".

The raters are asked to look at who the authors of the content are, who the website creators are, what is the EAT of each of them, off-site factors that indicate what the reputation and qualification of the writers are.

So the raters will look at search results for the writer, the brand and look for any kind of reputation information they can find. What are people saying about the creators of the content?

And then, they're also looking for the editorial and content review processes of the website that they're rating.

They want to know: how did this content come to be? What are the standards for it?

And this has not always been the case, even though EAT has kind of been with us the whole time.

We're marketers, we want to show expertise, authority and trust.

How has Google tried to find that in the past? Classic expertise was about content quality, back in 2011 during the Panda days.

Google was very interested in identifying thin or shallow content generated by a machine, like spun content, doorway pages, duplicate content and so on.

A lot of that stuff went into the Panda update.

These days expertise is really about usefulness.

Google wants to know whether the content is beneficial to the user.

Does the content actually help people out? Does the purpose of the content match the intent? Does the content actually answer a query?

Direct answers to queries are often showing up in search results as the featured snippet text.

Google also wants to know how accurate content is, and it wants evidence of expertise, which is where author bio boxes come in.

One example from 2021 was passage-based indexing.

If a page had a direct answer to a query buried in a very large document, Google would give it a boost.

So they're getting much better at actually identifying when text answers a query directly.

How to improve expertise?

Well, Google recommends having subject matter experts write content.

That's a good idea, because an expert is going to know which parts of a topic are important for users to know and which parts are not very important.

They're going to be able to write about stuff that will set your content apart from all the other competitors on the SERP.

And not only do we have to have experts write content, we also have to show users that the writer is actually an expert.

That comes with the author's biographical information.

We should also simply and directly answer the query, which means not beating around the bush and not inflating word count with fluff text.

It's going to be more about quality, not quantity.

One of the things that a lot of SEOs recommend is to do skyscraper content.

The idea is to look at all of the competitors for a keyword and then copy all of the things that they're talking about into one very large blog post or article.

That's not always a winning strategy.

Oftentimes, a shorter but more to-the-point page is going to rank in positions one and two.

Another part of expertise is citing sources and linking to other high EAT content.

Google wants to send users to pages that are high quality but they also want users to go to a high-quality page after that and a high quality page after that.

Linking to the good stuff is going to become important.

Authoritativeness

Authoritativeness in the past was a lot about backlinks.

Back in the old days, before the Penguin algorithm, Google had a very, very big problem with paid link spam, very targeted anchor text and a lot of low-quality guest blogs.

That's not really the case anymore.

Google is actually more selective about which links they're going to count. They're putting more weight on links that are coming from high trust and authority domains.

This can be news sites, magazines, big blogs, government sites, educational sites or any kind of site that is already gonna be highly trusted and highly linked to.

Google's gonna be looking at the contextual relevance of a link.

What does the surrounding text, or the context of the link, say about the page it's linking to?

There was a patent that came out maybe 10 years ago that said that Google was looking at the words before and after a link.

It turns out the keyword relevance of that didn't appear to be impacting rankings, but it looks like Google could be looking at the whole sentence or the paragraph that a link is inside of, to extract more information out of it.

Google's also looking for multiple sites saying the same thing about a brand.

If multiple sites are linking to our brand saying that we're great at what we do, that's a consensus that Google's going to pick up on, and it will help us rank for keywords related to that.

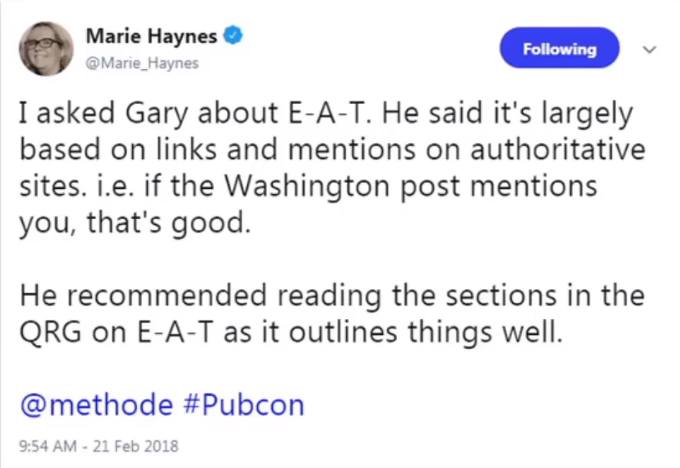

This screenshot is a report from Marie Haynes about Gary Illyes talking about E-A-T, and he says it's largely about links and mentions on authoritative sites.

The example was: if the Washington Post mentions you, that's good. If the New York Times mentions you, that's really good.

So we want to get links from the biggest newspapers that exist.

The way to get those links is to do public relations and do outreach.

Check out our link building services and get the best SEO link building service for your website from our team at UnderWP.

We want to get links and mentions from those really good domains, and to do that we need to create link-worthy content.

When we're doing pitches, the author on the other side of the pitch email is going to look at what we send them, and if it's not very good content they're not going to want to link to it.

One of the things that does get picked up a lot is original research.

A year or two ago, I did a research post on how often Google ignores our meta descriptions.

Turns out, it's about 70%.

That post is still getting that figure quoted and still getting links from people citing the study.

Original research is a very good thing to do.

Something that's been working well in our agency practice is doing scholarship, dream job and contest giveaways.

If your brand is going to do a contest for free money, you can get a lot of attention from that and a few links.

Another thing we've seen work very well is having the brand sponsor local schools, sports teams and conferences.

That's another easy way to get links from good domains.

Guest blogging still works but you should be more selective about who you do guest blogging with. The best sites aren't gonna ask for money.

As marketers, we can pitch things to the Moz blog and they're never gonna ask for money but they will be selective about which pitches they'll accept.

Trustworthiness

Classic trustworthiness was about identifying deception.

They really asked quality raters to look for cloaking, malicious ads, whether a website was hacked, whether there's malware, sneaky redirects and so on.

The above screenshot is from Matt Cutts, and these are the topics he talked about a lot when he was still at Google.

Trustworthiness is now about harm reduction.

Google wants to make sure users are going to websites that are the least likely to cause harm, or in other words the most beneficial to them.

Google wants to be sure that users are going to have a beneficial experience when they land on a page. Is that content really going to help them out?

And if the query is about health or wealth, that is, your money or your life (YMYL) pages, that's extra important.

The quality rater guidelines say that for YMYL queries the standards for EAT are much higher, and Google is looking into how to identify the most accurate content for YMYL queries.

Accuracy and agreeing with the mainstream consensus is going to be important.

How To Improve Trustworthiness Signals

The ways to address trustworthiness now are going to be getting multiple high trust and authority domains to say that we're good at what we do, or getting positive mentions that are relevant to what we do.

As with authority, if lots of established newspapers are saying that we're great, that's a strong signal to Google that we can be trusted.

And like I said, it's important to agree with well-established medical, scientific and historical consensus. Since Medic, alternative health sites have not been doing well in the SERPs.

Google really wants to make sure that users are landing on mainstream information for YMYL queries.

Reputation is also about getting reviews. We do have to try to get reviews from our customers, and we should also protect our brand's reputation.

If we're doing things that are generating negative reviews, we should stop, and we should also respond to negative reviews on platforms that allow it.

One of the things that raters are instructed to look for is prominent contact information. Google wants to see customer service phone numbers and contact forms on websites.

And of course, a very big trust signal is being on Wikipedia.

It's pretty hard to get a business on Wikipedia, and if you can do that, you're probably a very notable and trustworthy website.

How can we use the search quality rater guidelines and the program to improve our SEO practice?

One thing to do is pretend to be a quality rater, and to do that I'm asking all of you to read the entire search quality rater guidelines at least once.

It's about 172 pages. It's kind of boring, kind of dry, but I strongly recommend that everyone do it at least once in their career.

And then, after reading it, perform the research process as if you're a quality rater, but for the keywords that you want to rank for.

Look at your competitors' content and the competing websites. Look at their writers, their reputation and all of the E-A-T factors that Google talks about in the guidelines, and then do the same thing for your website.

And then think about who would get higher needs met and page quality scores. That is an important thing to do because Google's creating these algorithms in a way that's going to generate better scores in the quality rater program.

If we would get high scores, these algorithms are going to favour us.

In other words, we can pander to the algorithm if we do the types of things that are supposed to score highly and we can use that information to fill the gaps between us and our competitors.

Or, in other words, I'm recommending that we do a search quality rater guideline (SQRG) oriented audit.

We can upgrade our SEO audit practice by going beyond the typical crawl-the-site, report-on-problems audit: looking at the whole website and addressing these E-A-T things ahead of time, in case they are part of the algorithm now or could be part of the ranking algorithm in the future.

Reading the search quality rater guidelines is a big task, and Google updates them once or twice a year, but we don't have to re-read them every time they're updated.
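To make the gap-finding part of such an audit concrete, here is a minimal sketch. Everything in it is an assumption for illustration: the criteria names, the 1 to 5 scale and the example scores are made up, and the scores are your own rater-style judgments, not anything Google publishes.

```python
# Hypothetical criteria, loosely named after the factors discussed in this post.
CRITERIA = ["expertise", "authoritativeness", "trustworthiness", "needs_met"]


def find_gaps(ours: dict, theirs: dict) -> list:
    """Return (criterion, gap) pairs where the competitor outscores us, biggest gap first."""
    gaps = [(c, theirs[c] - ours[c]) for c in CRITERIA if theirs[c] > ours[c]]
    return sorted(gaps, key=lambda pair: pair[1], reverse=True)


# Made-up self-assessed scores on a 1-5 scale (assumption, not real data).
our_scores = {"expertise": 3, "authoritativeness": 2, "trustworthiness": 4, "needs_met": 3}
competitor_scores = {"expertise": 4, "authoritativeness": 5, "trustworthiness": 4, "needs_met": 3}

gaps = find_gaps(our_scores, competitor_scores)
for criterion, gap in gaps:
    print(f"{criterion}: competitor leads by {gap}")
```

Sorting by the size of the gap is just one way to prioritise; the point is to end up with a short list of places where a competitor looks stronger than we do.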

We can usually get away with reading a digest of the changes in the document.

Or you can also subscribe to our blog newsletter and stay updated with any important Google updates or algorithm changes whenever they happen.

We usually cover the most important updates and also show you the effects that they can have.

The other thing we can do is user surveys.

The websites that we look at every day begin to appear normal to us and we may not be able to tell when there is something that we're lacking on those websites.

What we could do is ask our users directly.

Do they think that we are answering their question? Do they think that we're accurate? Do they trust us?

We can just straight up ask them E-A-T oriented questions as if they were quality raters themselves.

Now we can take that feedback and incorporate it into improving our websites in ways that we may not be able to think about from our usual point of view.
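As a rough sketch of how that feedback could be summarised, the snippet below averages answers per question and picks out the weakest area. The question keys, the 1 to 5 agreement scale and the responses are all hypothetical, chosen only to mirror the questions above.

```python
from statistics import mean

# Hypothetical survey responses on a 1-5 agreement scale (made-up data).
responses = [
    {"answers_question": 5, "accurate": 4, "trustworthy": 3},
    {"answers_question": 4, "accurate": 4, "trustworthy": 2},
    {"answers_question": 3, "accurate": 5, "trustworthy": 1},
]


def average_by_question(rows):
    """Average the scores for each question across all respondents."""
    questions = rows[0].keys()
    return {q: mean(row[q] for row in rows) for q in questions}


averages = average_by_question(responses)

# The lowest-scoring question is the first place to look for improvements.
weakest = min(averages, key=averages.get)

print(averages)
print(f"Weakest area: {weakest}")
```

With real data you would want many more responses than this before drawing conclusions, but even a small sample can point at a blind spot.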

Final Words

There is a lot of SEO advice flooding the web. Most of it is terrible and incorrect.

The SEO advice coming from so-called SEO experts all over the world can range from plausible to completely ridiculous.

One example of this is the EAT score, which only makes sense in the mind of someone who doesn't understand much about search engines and how they work.

To clear up this concept, we came up with this detailed article about how EAT was born and how it affects your SEO rankings.

If you liked this post, do comment below and let us know your thoughts.
